January 23, 2026

How to Spot Content Gaps in AI-Generated Answers

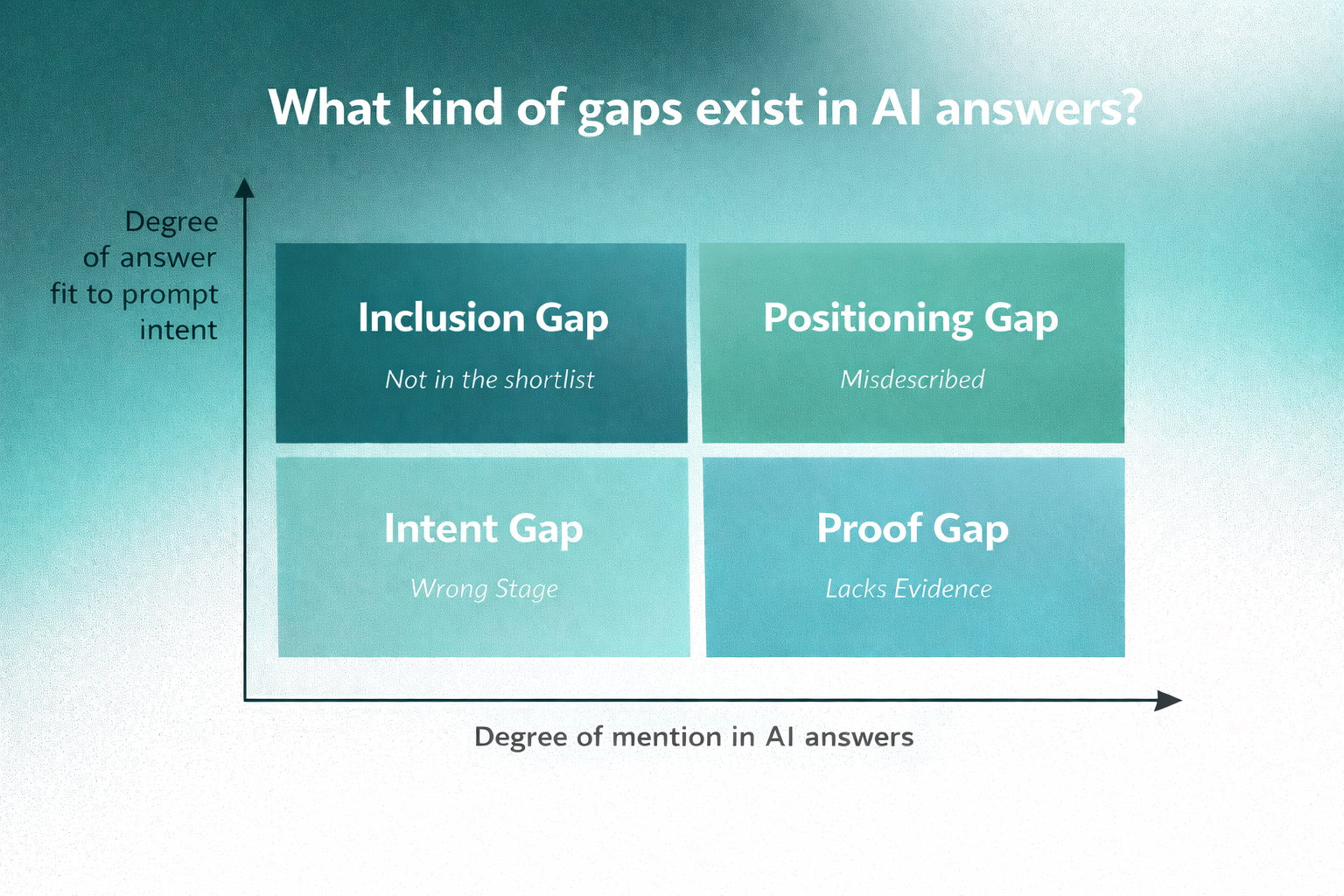

AI search visibility changes content gaps from missing keywords and pages to missing inclusion, positioning, proof, and decision-ready answers that AI can confidently summarize.

When customers ask AI a question, the response usually arrives packaged as a decision. By the time the customer scrolls down the page, their purchase options have been framed.

That’s the practical meaning of AI search visibility: whether your brand appears in the answers that shape customer consideration before they ever reach your site. It requires a step up from traditional content gap analysis, which was built for a world where a click marked the start of the funnel.

The good news: the standard playbook still holds true. Most marketers we’ve spoken to still compare competitor coverage, identify missing topics, and expand or refresh content to capture intent. What’s different is the interface that content now appears in - and how to spot the gaps in it.

Why AI search visibility changes what a content gap looks like

The shift happens because AI answers are assembled differently from search engine results pages. Documentation for AI-powered search experiences describes a “query fan-out” approach, where the system issues multiple related searches across subtopics and data sources, then identifies additional supporting pages while generating a response.

In other words, the model is assembling a category narrative from what it can retrieve, trust, and summarize cleanly. The answer is a synthesis of many sources, not a single ranked page.

There is also growing evidence that AI answers change click behavior. One large-scale analysis of 300,000 keywords found that the presence of AI-generated summaries in search results correlated with a 34.5% lower average clickthrough rate for the top-ranking page compared to similar informational keywords without those summaries. If fewer clicks happen, your website has fewer chances to correct misconceptions, sharpen differentiation, or win the comparison later.

This is why content gaps are no longer only missing topics and missing keywords. Increasingly, the expensive gaps are missing explanations. Some mainstream SEO guidance now explicitly treats “prompts your brand does not show for” as part of content gap analysis, alongside traditional keyword gaps. Focus is shifting away from search queries to specific questions, and the competition is also moving from page-level visibility to answer-level visibility.

One important nuance: AI systems can be highly inconsistent when recommending brands and products. The same prompt, run repeatedly, can produce different shortlists, different ordering, and different levels of confidence. That means you should avoid diagnosing gaps from a single screenshot and instead look for patterns that repeat across multiple runs, multiple prompts, and multiple engines.
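One way to look for patterns instead of single screenshots is to log every run and count how often each brand shows up. The sketch below is a minimal illustration, assuming you have already collected the answers yourself. The record fields (`engine`, `prompt`, `brands`), the brand names, and the engine names are hypothetical placeholders, not part of any real tool.

```python
from collections import Counter

def inclusion_rates(runs):
    """Return the share of runs in which each brand appears at least once."""
    counts = Counter()
    for run in runs:
        # Count a brand once per run, even if the answer repeats it.
        counts.update(set(run["brands"]))
    total = len(runs)
    if total == 0:
        return {}
    return {brand: n / total for brand, n in counts.items()}

# Hypothetical sample: one prompt, repeated twice on each of two engines.
runs = [
    {"engine": "engine_a", "prompt": "best crm for startups", "brands": ["Acme", "Globex"]},
    {"engine": "engine_a", "prompt": "best crm for startups", "brands": ["Globex"]},
    {"engine": "engine_b", "prompt": "best crm for startups", "brands": ["Acme", "Globex", "Initech"]},
    {"engine": "engine_b", "prompt": "best crm for startups", "brands": ["Globex"]},
]

rates = inclusion_rates(runs)
for brand, rate in sorted(rates.items()):
    print(f"{brand}: included in {rate:.0%} of runs")
```

A brand that appears in only a fraction of runs is a pattern worth investigating. A brand missing from one run is not.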

How to read AI answers and spot the gaps quickly

A practical way to think about gaps in AI search visibility is to begin with these questions in mind:

- Are you included in shortlist moments, especially comparisons, “best tools,” and “alternatives” prompts?

- If you are included, are you described correctly in plain language, or flattened into generic category language?

- When the answer compares options, are you evaluated on criteria you want to win on, or on someone else’s framing?

- Finally, does the model have enough defensible material to state your differentiators confidently, or does it hedge because it cannot justify the claim?

These questions should provide you with a quick diagnostic exercise that expands your assessment beyond brand presence to also include positioning and proof. In our experience, these checks help surface four common gap patterns that keyword tools often miss.

The first is an inclusion gap. This occurs when your brand does not appear in answers where it should be an obvious option, especially in prompts like “best for”, “alternatives”, or “X vs. Y”. Beyond rankings, this problem often arises when AI assistants fail to find enough clear and consistent information about your brand.

The second is a positioning gap. In this scenario, your brand is mentioned, but the accompanying description is fuzzy or outright inaccurate. AI assistants may have placed you in the wrong category, or described your brand too generically. In our observation, this usually means your brand messaging is not clear enough to be summarized in one sentence.

The third kind of gap is a proof gap. Here, AI assistants mention your brand, but avoid your strongest claims or tiptoe around them with cautious language. This is a strong signal that AI can’t find evidence that it can reliably repeat. If you want the answer to be more decisive, you need more “show” and less “tell” in the content it can draw from.

Finally, the fourth is an intent gap. Your brand appears in broad, educational answers, but disappears when the question approaches a decision. This often shows up around pricing, implementation effort, switching costs, risk, or requirements. In other words, you have content for learning, but not enough content that edges closer to the purchase decision.
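The four patterns can be turned into a simple checklist once you have aggregated your observations. The sketch below is illustrative only: the field names, the 0.5 threshold, and the idea of scoring each signal as a share between 0 and 1 are assumptions made for the example, and the accuracy and hedging scores would still need to come from a human reviewer.

```python
from dataclasses import dataclass

@dataclass
class BrandObservation:
    shortlist_inclusion: float    # share of shortlist prompts that mention the brand
    educational_inclusion: float  # share of broad, educational prompts that mention it
    accurate_description: float   # share of mentions a reviewer judged accurate
    hedged_claims: float          # share of mentions that use cautious language
    decision_inclusion: float     # share of decision-stage prompts that mention it

def diagnose(obs, threshold=0.5):
    """Return the gap patterns suggested by the observation (hypothetical rules)."""
    gaps = []
    if obs.shortlist_inclusion < threshold:
        gaps.append("inclusion")
    if obs.accurate_description < threshold:
        gaps.append("positioning")
    if obs.hedged_claims > threshold:
        gaps.append("proof")
    # Intent gap: visible while people are learning, absent when they decide.
    if obs.educational_inclusion >= threshold and obs.decision_inclusion < threshold:
        gaps.append("intent")
    return gaps

# Hypothetical scores for one brand.
example = BrandObservation(
    shortlist_inclusion=0.2,
    educational_inclusion=0.8,
    accurate_description=0.9,
    hedged_claims=0.7,
    decision_inclusion=0.1,
)
found = diagnose(example)
print(found)
```

The thresholds matter less than applying the same rules every time, so that changes between audits reflect the answers and not the reviewer.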

Turn AI search visibility insights into action

Once you’ve identified the kind of gap your brand faces in AI answers, the next step is to translate it into actions that can be repeated at scale.

Inclusion gaps usually call for shortlist assets, such as comparisons, alternatives pages, and “best for” breakdowns.

Positioning gaps call for clearer category language and consistent messaging across the pages most likely to be retrieved and summarized.

Proof gaps call for evidence surfaces, such as case studies, benchmarks, methodology pages, and implementation stories.

Intent gaps call for direct bottom-funnel answers, such as pricing explainers, onboarding guidance, and objection handling content that is honest about tradeoffs.
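The mapping above is easy to keep as a small lookup so that every audit produces the same kind of backlog. This is a minimal sketch: the asset names come from the list above, while `ASSETS_BY_GAP` and `plan_assets` are hypothetical names chosen for the example.

```python
# Asset types per gap, taken from the mapping described above.
ASSETS_BY_GAP = {
    "inclusion": ["comparison pages", "alternatives pages", "'best for' breakdowns"],
    "positioning": ["clearer category language", "consistent messaging on key pages"],
    "proof": ["case studies", "benchmarks", "methodology pages", "implementation stories"],
    "intent": ["pricing explainers", "onboarding guidance", "objection-handling content"],
}

def plan_assets(gaps):
    """Return an ordered, de-duplicated list of asset types for the given gaps."""
    plan = []
    for gap in gaps:
        # Unknown gap names are skipped instead of raising an error.
        for asset in ASSETS_BY_GAP.get(gap, []):
            if asset not in plan:
                plan.append(asset)
    return plan

backlog = plan_assets(["proof", "intent"])
for item in backlog:
    print("-", item)
```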

It is here that we believe AI-led analysis can really strengthen traditional content gap analysis. Instead of filling content gaps purely based on page rankings, the focus shifts towards gaps that repeatedly cause your brand to be missing, misdescribed, or cautiously framed in AI answers. That’s not to say that page visibility is no longer a concern - it still is, but now you’re also optimizing for how AI answers interpret and repeat your message at scale.

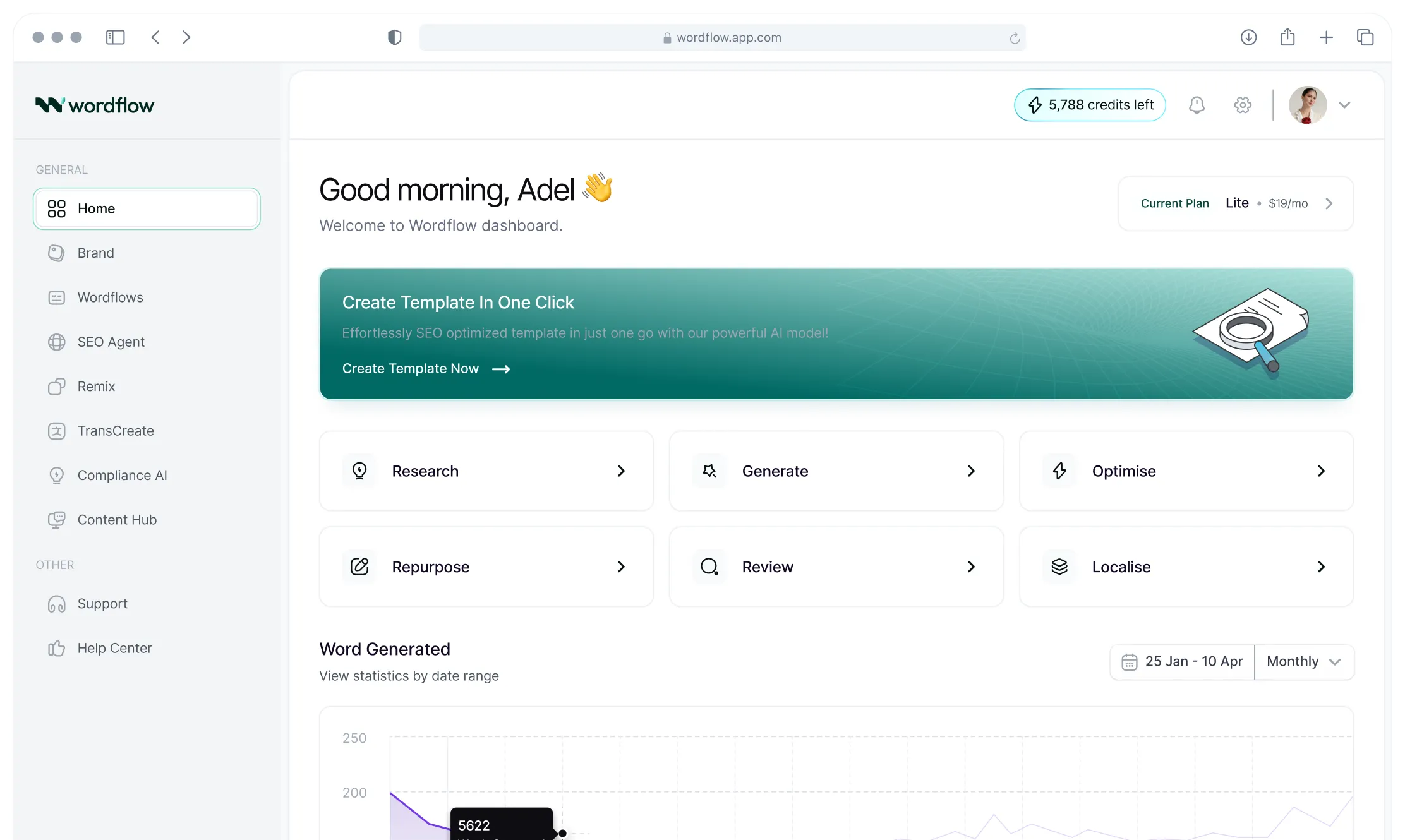

Close the AI search visibility loop with Wordflow

Ultimately, if AI can’t find a clean, clear-cut way to describe your brand, it will borrow a different framing; if it can’t find proof, it will hedge.

That is why manual workflows break. Prompts and screenshots can surface patterns, but the work quickly turns into spreadsheets and one-off decisions. The bottleneck is turning insight into a repeatable shipping plan with guardrails.

Wordflow closes that loop from end to end: start with a brand profile that makes messaging consistent, track AI search visibility across key metrics like share of voice and sentiment, and automatically create on-brand, compliant content that fits right into any channel with our GEO Writer.

To see it in practice, try Wordflow: run an intent-based prompt set, sample it across engines and repeats, identify where your brand is consistently missing or misframed, then ship the pages, proof, and comparisons the answer layer can reuse with confidence.

