February 4, 2026

How to Build a Living Content Roadmap Using AI Response Data

Use signals from AI answers to dynamically inform what content your team should publish.

When customers ask AI a question, the response often arrives as a well-framed answer that motivates quick decisions over mulling options further.

This already has implications for content that marketers put out: beyond ranking for keywords, the challenge today is threefold: (1) getting included in AI answers, (2) being described correctly in AI answers, and (3) having your claims supported by proof that answer engines can cite when shaping AI answers.

This is also why static planning cycles have become less reliable now. Content cited by AI can still be evergreen and comprehensive, but the way AI experiences assemble and cite answers changes faster than a static roadmap can track.

The answer to this problem is a “living” content roadmap that is capable of turning AI search tracking into immediately actionable decisions. It should operate like a feedback loop: convert what AI answers are saying into shippable output, measure brand mentions in AI answers, and increase the probability of your brand being included and correctly represented in those answers.

This is the bridge between our earlier "spot the gaps" framing and an actual operating system. In our earlier writeup on how to measure your brand presence inside AI conversations, we defined the gap patterns that matter. Here we tighten the next step, which is the gap from hypothesis to asset and acceptance criteria.

Capture AI answers as comparable data

First off, AI search tracking data is only useful if it is comparable over time. For each tracking run:

- Brand inclusion: capture whether you are included in AI answers

- Brand description: how AI describes your brand

- Attributable claims: What claims are made about your brand and what evidence the AI answer uses.

- Entity correctness: whether theAI answer confuses your brand with similarly named brands, attributes competitor features to your brand or mis-states your brand’s category.

- Variants: what has shifted in AI answers since the last run.

What about uncertainty markers? We recommend simply treating this as a diagnostic signal as hedging language can reflect the model’s style or genuine uncertainty as much as content quality.

On the last point, it is helpful to initially define variants so that you have a baseline criteria to decide what kind of change is meaningful to track. A workable definition could be:

one prompt wording (a paraphrase of the same intent) times one AI platform or experience (search and chat experiences) times one run window (for example, this week's run).

A quick caveat: Always measure the customer experience rather than API outputs. Developer API outputs may differ from what buyers see in consumer interfaces, because consumer products can add additional - retrieval, grounding, citation among others - layers that are not replicated by a single API call.

Move from gap diagnosis to shippable hypotheses

Once you have consistent rows of tracking data, you should tag each underperforming prompt cluster with one primary gap type so the fix is unambiguous. We previously outlined four types of gaps in AI responses: inclusion, positioning, proof, and intent.

Then, translate each gap type into an asset hypothesis. We use the term “hypothesis” here as the point of doing this is to place a “first bet” that most plausibly affects what AI platforms retrieve, summarize, and validate with change over time. Some asset hypotheses that come to mind:

- Inclusion gaps: decision-ready pages like use-cases plus alternatives or comparisons.

- Positioning gaps: a clear category page plus "what we are / who we're for" narrative blocks that are easy to summarise.

- Proof gaps: a methodology page and structured proof like benchmarks, constraints, and case studies.

- Intent gaps: purchase-adjacent content that answers what buyers ask when decisions get real, such as pricing ranges or pricing logic, risks, and "how to choose" guidance.

A practical default playbook still helps, as long as you treat it as a starting point.

The challenge is in trying to diagnose what the problem is when a hypothesis fails. At Wordflow, we’re working on ways to infer this via citation audits and crawlability tests - stay tuned for a follow-up on how to effectively tell different failure modes apart.

Make acceptance criteria measurable

Naturally, tagging requires consistent sampling. Google AI Overviews, according to Ahrefs, have an average persistence of 2.15 days - meaning, the content in these overviews change roughly every two days. Monthly checks can miss meaningful drift, especially in what gets emphasised and what gets cited. Research shows that even small prompt perturbations can change an LLM's output, which is why a single run isn’t meaningful.

To ground this exercise, we’ll need acceptance criteria. Acceptance criteria should be measurable against your defined variants. Below are two examples of how you can define acceptance criteria:

- "In Cluster X, move from not mentioned to top-three mention in at least 30% of variants," where variants are explicitly defined as eight paraphrases times two AI platforms times two weekly runs.

- "Increase attributable proof on Differentiator Y in at least 40% of variants and gain at least one citation from an agreed authoritative domain set."

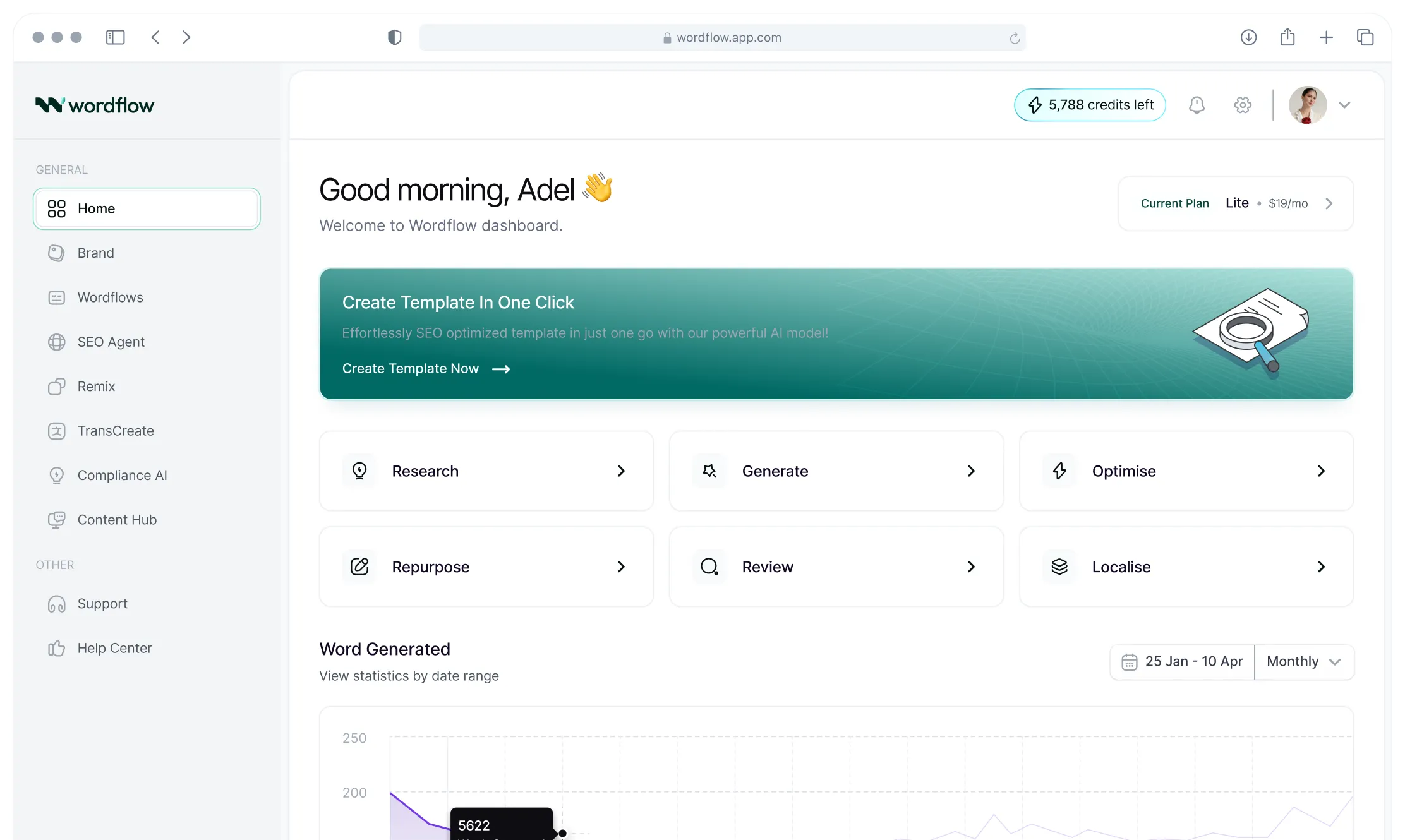

Wordflow operationalises this loop end-to-end: AI Search Campaigns define what you track, AI Visibility Reporting captures the rows, and AI Visibility Pulse surfaces change over time, so your roadmap updates from real answer behavior without becoming chaotic.

Read More

Artifical Intelligence.

Real Results

Ready to transform how you market? Start your unlimited free trial today and experience the advantage Wordflow brings to your results.

.webp)